Shared ChatGPT Links Can Expose Sensitive Data

August 22, 2025

When ChatGPT conversations are shared publicly, sensitive inputs like credentials, code, or documents become accessible, creating serious organizational security risks.

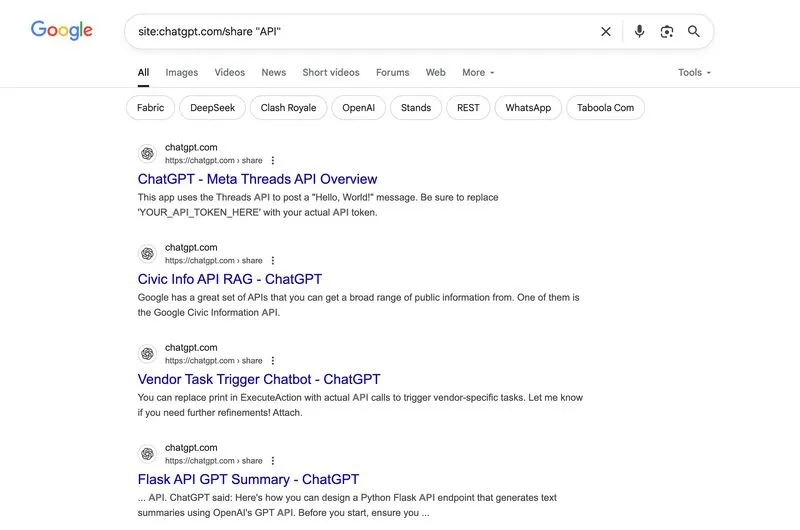

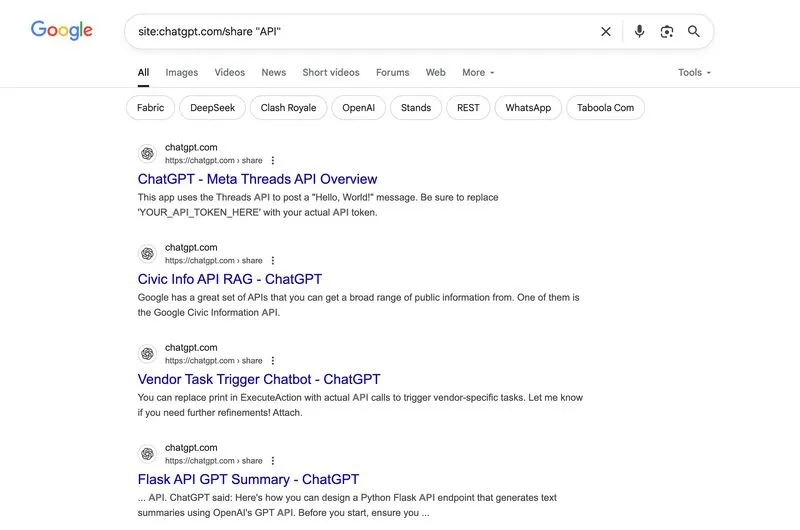

That’s huge: any ChatGPT conversation that’s shared via a link becomes publicly accessible to anyone who has that link. And many people put a lot of sensitive or personal information into their chats: login credentials, source code, API keys, internal docs, and more.

And that creates serious security risks for organizations.

Just stop feeding private data to AI platforms - don't expose yourself or the companies you work for.
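If you do need to paste text into a chat, one way to act on this is to scrub it first. Here is a minimal sketch in Python, assuming a handful of illustrative regex patterns; the `PATTERNS` table and the `redact` helper are hypothetical names made up for this example, and they will not catch every kind of secret.

```python
import re

# Illustrative patterns only: assumptions for this sketch,
# not an exhaustive or authoritative list of secret formats.
PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_header": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    "password_assignment": re.compile(r"(?i)\b(?:password|passwd|pwd)\s*[:=]\s*\S+"),
    "bearer_token": re.compile(r"(?i)\bbearer\s+[A-Za-z0-9._\-]{20,}"),
}


def redact(text):
    """Replace likely secrets with placeholders.

    Returns the redacted text and the names of the patterns that matched.
    """
    found = []
    for name, pattern in PATTERNS.items():
        # subn returns the new string and the number of replacements made
        text, count = pattern.subn(f"[REDACTED:{name}]", text)
        if count:
            found.append(name)
    return text, found


if __name__ == "__main__":
    sample = "db password = hunter2, key AKIAABCDEFGHIJKLMNOP"
    cleaned, hits = redact(sample)
    print(cleaned)
    print("Matched patterns:", hits)
```

A check like this is only a safety net. It flags a few obvious formats, so treat a clean result as "nothing obvious found", not as proof the text is safe to share.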